Ten years ago, artificial intelligence sounded like science fiction. Today, AI is marketed as the magic touch in every piece of tech we use. If you sit still long enough, someone might offer you a plate of AI-generated food or ask you to adopt an AI-powered pet. (I hope not. I really like real food and real dogs.)

Despite the marketing blitz of an AI-powered world, there are effective ways to use AI, and good ways to make sure humans keep accessing real information and using their non-artificial intelligence.

Growing Up Alongside the Internet

I’ve been told recently, “You’re really good at prompting the right questions for ChatGPT.” That skill didn’t come out of nowhere; a lot of it comes from the niche slice of the generation I belong to. The questions I ask shape the answers I receive.

I’m in that sweet spot: old enough to remember the world before the internet, but young enough to have relied on it for school and work. My high school essays were written in pen, and I referenced encyclopedias on CD-ROMs. I asked Jeeves plenty of questions and took Yahoo Answers at face value. I also knew I was better off quoting a book, largely because I couldn’t figure out how to cite a website without an author or date. Even as Wikipedia launched, my teachers taught us to be skeptical of information we readily found online.

In hindsight, they were exactly where I am now - trying to see the benefits of new technology without letting it replace critical thinking.

That early internet experience taught me something valuable: information is abundant, but credibility matters. Anyone can publish content; it’s up to us to evaluate the source.

Which brings me back to AI.

This isn’t my first rodeo when it comes to new ways to access information. I approach today’s AI boom with curiosity and skepticism, which helps me notice what questions to ask ChatGPT.

What AI Is Good At (and What It Isn’t)

AI is good at supporting tasks but not replacing human judgment.

When integrated thoughtfully, AI can be a huge time-saver, from drafting emails for me in half the time to planning dinners around my allergies to suggesting housewarming gifts for acquaintances.

It’s good at what it is good at.

But AI isn’t a manager, a decision-maker, or a moral compass - we’ve seen how often it supports bad ideas in an effort to be your friend. It works best when it handles the repetitive, fatigue-inducing tasks that humans can do but are better off trading for creative, problem-solving, or meaningful work.

How We Use AI at Comma

At Comma, when we discuss natural language processing and AI, we focus on what it is good at. Yes, it powers part of our product, but we refuse to AI-wash our software. Instead, we use it to enhance, not replace, compliance teams. We give users options, from which language model they want to use to how strictly they want policies to be filtered.

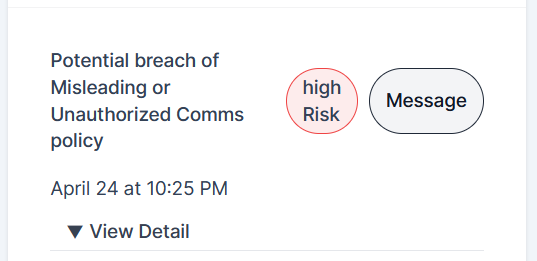

1. Policy Matching with Context

Most legacy compliance tools flag simple keywords. See the word “guarantee” on your marketing page? Flagged. But those systems miss the nuance, such as the distinction between “we guarantee you’ll double your money” and “we advise, but we cannot guarantee specific returns.”
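To make the keyword problem concrete, here is a minimal sketch of a keyword-only flagger. It is not any vendor’s actual code; the watch list and the function name `keyword_flags` are made up for illustration. It simply shows that a word match treats both example sentences the same way.

```python
import re

# Hypothetical watch list; a real tool would load its own policy terms.
FLAGGED_KEYWORDS = {"guarantee"}


def keyword_flags(message: str) -> list[str]:
    """Return every watch-list word found in the message, ignoring context."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    return sorted(words & FLAGGED_KEYWORDS)


risky = "We guarantee you'll double your money."
careful = "We advise, but we cannot guarantee specific returns."

# Both sentences contain the word, so both get flagged,
# even though only the first one makes a promise.
print(keyword_flags(risky))
print(keyword_flags(careful))
```

The matcher has no way to see the “cannot” in the second sentence, which is exactly the nuance a keyword list misses.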

Comma’s contextual AI risk detection looks deeper. It reads language in context, much as a person would, distinguishing harmless chatter from actual policy violations. That means fewer false alarms and better accuracy. In practice, employees aren’t constantly interrupted by false positives, and compliance teams can focus their energy on genuine risks instead of chasing noise.

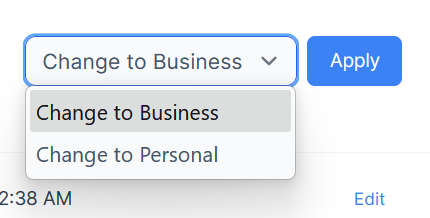

2. Business vs. Personal Boundaries

Our system scans WeChat, Signal, WhatsApp, LinkedIn, Gmail, Microsoft 365, Bloomberg Chat, and over 30 channels in real time. The goal is to capture every channel where conversations occur. However, we built it to respect boundaries: messages with business contacts are archived; personal ones are filtered out. AI helps sort the stream, but humans keep the balance.
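As a rough illustration of that boundary, the sketch below splits a stream of messages into “archive” and “filter out” based on whether the sender is a known business contact. Everything here is an assumption made for the example: the `Message` fields, the `BUSINESS_CONTACTS` set, and the `split_messages` function are hypothetical, and a plain contact list stands in for whatever sorting the real system does.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    channel: str
    sender: str
    text: str


# Hypothetical list of known business contacts.
BUSINESS_CONTACTS = {"client@example.com", "broker@example.com"}


def split_messages(messages):
    """Return (archived, filtered_out) based on who sent each message."""
    archived, filtered_out = [], []
    for message in messages:
        if message.sender in BUSINESS_CONTACTS:
            archived.append(message)      # business contact: keep for the record
        else:
            filtered_out.append(message)  # personal: never stored
    return archived, filtered_out


inbox = [
    Message("WhatsApp", "client@example.com", "Can we review the portfolio?"),
    Message("WhatsApp", "mom@example.com", "Dinner on Sunday?"),
]
archived, filtered_out = split_messages(inbox)
print(len(archived), len(filtered_out))
```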

3. Human-in-the-Loop Oversight

At Comma, AI doesn’t get the final word. Compliance teams stay in control. If our AI models flag a message as a potential violation, a person decides what happens next, whether that means sending a quick email to a colleague or creating a case to track. And if the AI gets it wrong, you can train it by correcting the mistake. Over time, that feedback loop makes the system smarter without ever removing human judgment. AI reduces decision fatigue, allowing compliance officers to focus their energy on high-risk issues instead of being mentally bogged down by thousands of routine choices each week.
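The flow described above can be sketched in a few lines. This is a simplified, hypothetical model, not Comma’s implementation: the names `Flag`, `Decision`, and `ReviewQueue` are invented for the example. The point it illustrates is that the model only suggests, the reviewer’s decision is what gets returned, and a dismissed flag is saved as a correction that could later be used as feedback.

```python
from dataclasses import dataclass, field
from enum import Enum


class Decision(Enum):
    DISMISS = "dismiss"                  # reviewer says the flag was wrong
    EMAIL_COLLEAGUE = "email_colleague"  # quick follow-up
    OPEN_CASE = "open_case"              # track it formally


@dataclass
class Flag:
    message: str
    model_label: str  # the model's suggestion, e.g. "potential_violation"


@dataclass
class ReviewQueue:
    corrections: list = field(default_factory=list)

    def review(self, flag: Flag, decision: Decision) -> Decision:
        """Apply the human decision; the model label is only a suggestion."""
        if decision is Decision.DISMISS:
            # The reviewer disagreed with the model, so keep the example
            # as a correction for future training.
            self.corrections.append((flag.message, "not_a_violation"))
        return decision


queue = ReviewQueue()
flag = Flag("We cannot guarantee specific returns.", "potential_violation")
outcome = queue.review(flag, Decision.DISMISS)
print(outcome, queue.corrections)
```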

Why This Matters at Comma Compliance

Too many tools on the market slap “AI-powered” on the label without solving the real problem: compliance officers drowning in false positives. At Comma, we build with transparency in mind; we know we can’t replace compliance teams, and we don’t want to. Human judgment matters.

We built Comma for 2025, but not to chase the AI buzzword. We built it so compliance officers can do their jobs with less noise, more clarity, and fewer sleepless nights. AI gives us contextual intelligence while humans provide the judgment calls.

Being good at prompting AI comes from the same habits I built growing up alongside the internet: skepticism, curiosity, and asking the right questions.

When you understand how AI works and which tasks it’s good at, it’s easier to use it without over-relying on it. We designed Comma with this reality in mind. AI helps identify risks, but we maintain a transparent process so humans can ask more informed questions, make better decisions, and build genuine trust.

You’re right to be cautious - but it’s also totally appropriate to see the benefits of the AI boom. After all, I remember that creating my first Angelfire webpage felt like finding an entirely new world.